5PM.AI modernised their AI matchmaking engine to improve performance and scalability. The solution uses AWS serverless and managed services, including Amazon Bedrock, Amazon OpenSearch Service, AWS Lambda, and Amazon DynamoDB, to deliver low-latency semantic search, match scoring, and personalised recommendations.

The Challenge

5PM.AI’s existing matchmaking engine needed significant modernisation to keep up with growing performance demands. The platform, which helps professionals discover and prioritise valuable business relationships, struggled with high query latency, limited scalability, and little explainability of match results. Its query parsing, semantic search, and candidate ranking workflows had to be re-engineered to reduce latency, keep results fresh, strengthen compliance, and provide transparent “why it matched” explanations that build user trust.

The Solution

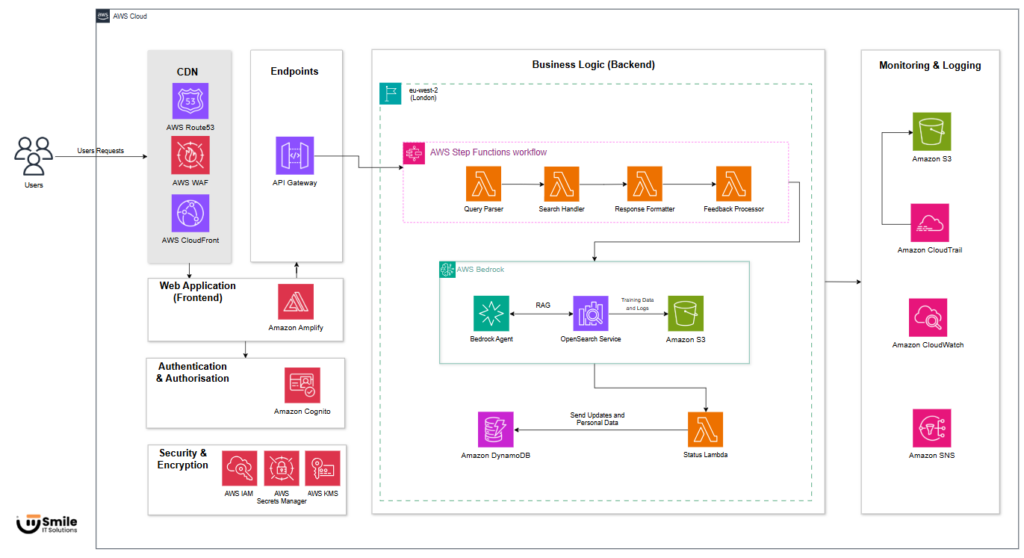

We implemented a modernised AI Engine on a serverless, cloud-native architecture using AWS managed services. Amazon API Gateway and AWS Lambda handle query orchestration, providing elastic scalability and reduced operational overhead. Amazon Bedrock foundation models (Nova Sonic, Titan Embeddings) power intent parsing and embedding generation, converting natural-language queries into structured attributes with greater accuracy.
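As an illustration of the intent-parsing step, the sketch below shows one way to validate a model's reply before it is used as search filters. It assumes a hypothetical prompt that asks the model to answer in JSON with `industry`, `skills`, and `region` keys; the prompt text, the `QueryIntent` type, and the normalisation rules are illustrative and are not 5PM.AI's implementation. The model call itself is omitted. The `titan_embedding_body` helper assumes Titan Text Embeddings V2, whose InvokeModel request body takes `inputText`, `dimensions`, and `normalize`.

```python
import json
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical prompt: the production prompt is not described in the source.
INTENT_PROMPT = (
    "Extract the search intent from the query below. Reply with JSON only, "
    'using the keys "industry" (string or null), "skills" (list of strings) '
    'and "region" (string or null).\n\nQuery: {query}'
)


@dataclass
class QueryIntent:
    """Structured attributes later used as search filters (hypothetical schema)."""
    industry: Optional[str] = None
    skills: List[str] = field(default_factory=list)
    region: Optional[str] = None


def build_prompt(query: str) -> str:
    """Fill the hypothetical intent-extraction prompt with the user's query."""
    return INTENT_PROMPT.format(query=query)


def _clean(value) -> Optional[str]:
    """Return a trimmed, lower-cased string, or None for empty or non-string values."""
    if isinstance(value, str) and value.strip():
        return value.strip().lower()
    return None


def parse_intent(model_output: str) -> QueryIntent:
    """Validate the model's JSON reply; fall back to no filters if it is malformed."""
    try:
        data = json.loads(model_output)
    except json.JSONDecodeError:
        return QueryIntent()
    if not isinstance(data, dict):
        return QueryIntent()
    raw_skills = data.get("skills")
    if not isinstance(raw_skills, list):
        raw_skills = []
    # De-duplicate after normalising, so "Python" and " python" collapse to one skill.
    skills = sorted({s for s in (_clean(x) for x in raw_skills) if s})
    return QueryIntent(
        industry=_clean(data.get("industry")),
        skills=skills,
        region=_clean(data.get("region")),
    )


def titan_embedding_body(text: str, dimensions: int = 1024) -> str:
    """InvokeModel request body, assuming Titan Text Embeddings V2."""
    return json.dumps({"inputText": text, "dimensions": dimensions, "normalize": True})
```

Falling back to an empty `QueryIntent` when the reply is not valid JSON degrades to an unfiltered semantic search rather than failing the request, which is one reasonable choice when prompt output is not fully reliable.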

Amazon OpenSearch Service provides millisecond-latency semantic vector search with advanced metadata filtering by industry, skills, and geography. Amazon DynamoDB stores user histories, feedback signals, and persona data, and DynamoDB Streams triggers adaptive re-ranking based on user interactions (thumbs up/down, skipped results).
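The metadata-filtered vector search described above can be sketched as two request bodies for the OpenSearch k-NN plugin: an index mapping with a `knn_vector` field, and a `knn` query whose `filter` clause restricts candidates by industry, skills, and region. The field names, the HNSW/Lucene method settings, and the 1024-dimension size are assumptions for illustration; the dimension must match whatever embedding model produces the vectors, and filtering inside the `knn` clause requires an OpenSearch version and engine that support efficient k-NN filtering.

```python
from typing import Optional, Sequence

EMBEDDING_DIM = 1024  # assumption: must equal the embedding model's output size


def index_mapping(dim: int = EMBEDDING_DIM) -> dict:
    """Index body with a k-NN vector field plus keyword fields for filtering."""
    return {
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                "embedding": {
                    "type": "knn_vector",
                    "dimension": dim,
                    "method": {"name": "hnsw", "engine": "lucene", "space_type": "cosinesimil"},
                },
                "industry": {"type": "keyword"},
                "skills": {"type": "keyword"},
                "region": {"type": "keyword"},
            }
        },
    }


def knn_query(
    vector: Sequence[float],
    k: int = 10,
    industry: Optional[str] = None,
    skills: Optional[Sequence[str]] = None,
    region: Optional[str] = None,
    dim: int = EMBEDDING_DIM,
) -> dict:
    """k-NN search body; metadata filters are applied inside the knn clause."""
    if len(vector) != dim:
        raise ValueError(f"expected a {dim}-dimensional vector, got {len(vector)}")
    filters = []
    if industry:
        filters.append({"term": {"industry": industry}})
    if skills:
        # `terms` matches profiles that list at least one of the requested skills.
        filters.append({"terms": {"skills": list(skills)}})
    if region:
        filters.append({"term": {"region": region}})
    knn_clause = {"vector": list(vector), "k": k}
    if filters:
        knn_clause["filter"] = {"bool": {"filter": filters}}
    return {"size": k, "query": {"knn": {"embedding": knn_clause}}}
```

With the `opensearch-py` client, these bodies would be passed to `client.indices.create(index=..., body=index_mapping())` and `client.search(index=..., body=knn_query(...))`. Putting the filter inside the `knn` clause, rather than filtering after retrieval, lets the engine return up to `k` results that already satisfy the metadata constraints.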

Amazon S3 stores training datasets, match quality reports, and operational logs under lifecycle policies. Amazon CloudWatch and AWS CloudTrail provide real-time monitoring, observability, and audit trails. AWS Secrets Manager and AWS KMS provide secure credential management and encryption of all sensitive data at rest and in transit.
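The S3 lifecycle policies could take a form like the rule set below, built in the shape that the S3 `PutBucketLifecycleConfiguration` API (and boto3's `put_bucket_lifecycle_configuration`) accepts. The prefixes, transition ages, storage classes, and one-year log expiry are hypothetical values chosen for illustration; the source does not state the actual retention periods.

```python
def lifecycle_configuration() -> dict:
    """Hypothetical S3 lifecycle rules for operational logs and match quality reports."""
    return {
        "Rules": [
            {
                # Move operational logs to Standard-IA after 30 days, delete after a year.
                "ID": "operational-logs",
                "Filter": {"Prefix": "logs/"},
                "Status": "Enabled",
                "Transitions": [{"Days": 30, "StorageClass": "STANDARD_IA"}],
                "Expiration": {"Days": 365},
            },
            {
                # Archive match quality reports to S3 Glacier Flexible Retrieval after 90 days.
                "ID": "match-quality-reports",
                "Filter": {"Prefix": "reports/"},
                "Status": "Enabled",
                "Transitions": [{"Days": 90, "StorageClass": "GLACIER"}],
            },
        ]
    }
```

The rules could then be applied with `boto3.client("s3").put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=lifecycle_configuration())`, which requires AWS credentials and an existing bucket.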

The Results

- AI Engine modernised using AWS serverless and managed services, achieving up to a 70% reduction in infrastructure provisioning time and 35–50% lower operational overhead than the legacy architecture through on-demand scaling and pay-per-use consumption.

- Query response latency reduced by approximately 60%, with average response times consistently below 300 ms under normal load and remaining under 500 ms during peak traffic periods, meeting real-time matchmaking performance requirements.

- Platform availability exceeds 99.95%, enabled by multi-AZ managed services and fault-tolerant serverless components, ensuring continuous service delivery across production environments.
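To put the availability figure in perspective, an availability target implies a downtime budget equal to the unavailable fraction multiplied by the length of the period. The helper below is plain arithmetic, not a measurement from the platform: at 99.95%, it allows about 21.6 minutes of downtime in a 30-day month and about 4.4 hours in a 365-day year.

```python
MINUTES_PER_DAY = 24 * 60


def downtime_budget_minutes(availability_pct: float, period_days: float) -> float:
    """Maximum downtime, in minutes, permitted over a period at a given availability."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability_pct must be between 0 and 100")
    return (1 - availability_pct / 100) * period_days * MINUTES_PER_DAY


if __name__ == "__main__":
    for label, days in (("30-day month", 30), ("365-day year", 365)):
        minutes = downtime_budget_minutes(99.95, days)
        print(f"{label}: {minutes:.1f} min ({minutes / 60:.2f} h)")
```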

Lessons Learned

- Integrating Amazon Bedrock foundation models for intent parsing required careful prompt engineering to accurately convert natural-language queries into structured attributes across diverse professional networking contexts.

- Implementing Amazon OpenSearch Service for vector search highlighted the importance of proper embedding dimensionality and index configuration to achieve optimal millisecond-latency retrieval.

- Building the feedback loop mechanism with DynamoDB Streams enabled real-time adaptive re-ranking, but required thoughtful schema design to balance write throughput with query flexibility.

- The explainability layer proved essential for user trust: generating human-readable “why it matched” outputs significantly improved user engagement with search results.
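The feedback loop and the explainability layer can be sketched together. The first function is the kind of parsing a Lambda function triggered by DynamoDB Streams might perform, turning thumbs-up, thumbs-down, and skip signals into per-candidate score adjustments; the second applies those adjustments at query time and attaches “why it matched” reasons. The item attributes (`candidate_id`, `signal`), the adjustment weights, and the reason wording are hypothetical; the sketch assumes a stream view type that includes new images and omits persisting the adjustments between invocations.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical score adjustments per feedback signal.
FEEDBACK_DELTAS = {"thumbs_up": 0.10, "thumbs_down": -0.20, "skipped": -0.05}


def feedback_adjustments(stream_event: dict) -> Dict[str, float]:
    """Sum per-candidate score deltas from DynamoDB Streams INSERT records.

    Assumes each feedback item carries string attributes `candidate_id` and
    `signal`, and that the stream view type includes NEW_IMAGE.
    """
    deltas: Dict[str, float] = defaultdict(float)
    for record in stream_event.get("Records", []):
        if record.get("eventName") != "INSERT":
            continue  # ignore updates and deletes of feedback items
        image = record.get("dynamodb", {}).get("NewImage", {})
        candidate_id = image.get("candidate_id", {}).get("S")
        signal = image.get("signal", {}).get("S")
        if candidate_id and signal in FEEDBACK_DELTAS:
            deltas[candidate_id] += FEEDBACK_DELTAS[signal]
    return dict(deltas)


def rerank_with_reasons(query: dict, candidates: List[dict], adjustments: Dict[str, float]) -> List[dict]:
    """Combine vector similarity with feedback and explain each match."""
    results = []
    for cand in candidates:
        reasons = []
        shared = sorted(set(query.get("skills", [])) & set(cand.get("skills", [])))
        if shared:
            reasons.append("Shared skills: " + ", ".join(shared))
        for attr in ("industry", "region"):
            if query.get(attr) and query.get(attr) == cand.get(attr):
                reasons.append(f"Same {attr}: {cand[attr]}")
        delta = adjustments.get(cand["id"], 0.0)
        if delta:
            reasons.append(f"Adjusted by your earlier feedback ({delta:+.2f})")
        results.append({"id": cand["id"], "score": cand["similarity"] + delta, "reasons": reasons})
    # Highest adjusted score first.
    results.sort(key=lambda r: r["score"], reverse=True)
    return results
```

Keeping the reasons alongside the score means the same data that drives the ranking also produces the explanation shown to the user, so the two cannot drift apart.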